The AI Workflow Stack Every PM Is Expected to Understand Now

Breaking Down the Layers That Power Today’s AI Products

Most PMs think they’re behind on AI tools.

What they’re actually behind on is how work flows now.

Let’s start with something uncomfortable.

Most PMs aren’t struggling with AI because they’re bad at prompting.

They’re struggling because the PM job quietly changed, and nobody stopped to explain how.

Teams didn’t announce it.

Job descriptions weren’t clearly updated.

No one said, “Hey, your mental model for product work is outdated.”

But expectations shifted anyway.

Suddenly, PMs are expected to:

reason about model behavior

understand why outputs drift

debug “AI bugs” that aren’t really bugs

decide when humans should step in

explain why something worked last week but broke today

And many PMs are left thinking:

“I’m doing everything right… So why does this feel harder?”

This post is about that gap.

The mistake PMs are making

Most PMs approach AI like this:

“What AI feature should we add?”

That framing worked in the old world.

In the new world, the real question is:

“How does work move through this system - and where does AI change the flow?”

That’s the shift.

AI didn’t just add features.

It rewired workflows.

And PMs are now expected to understand the AI workflow stack, whether or not anyone calls it that.

Why “knowing AI” isn’t enough anymore

You can:

know what an LLM is

understand RAG at a high level

use ChatGPT daily

…and still struggle badly as a PM on an AI product.

Because what matters now isn’t knowledge of components.

It’s understanding how they connect.

The job moved from:

managing outputs

to

managing systems that produce outputs

That’s a different skill entirely.

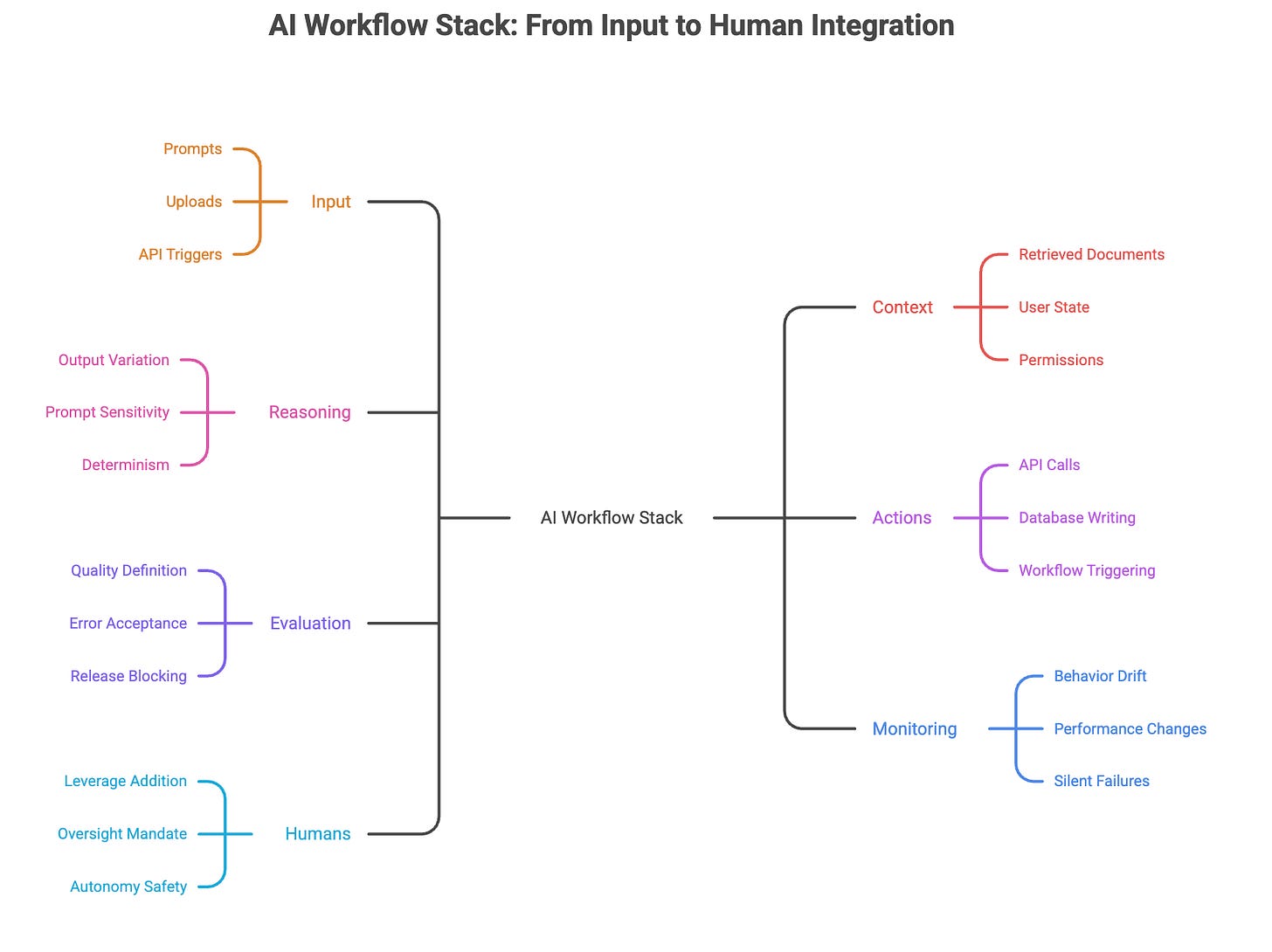

The AI workflow stack

Every modern AI product - chatbots, copilots, agents, internal tools - runs on the same basic flow.

Not theory.

Not buzzwords.

This is what actually happens in production.

1. Input: where messiness begins

This is where users interact:

prompts

questions

uploads

events

API triggers

Here’s the thing PMs underestimate:

Most AI failures start here.

Ambiguous input.

Conflicting intent.

Users asking for things the system was never designed to handle.

Good PMs obsess over:

what inputs are allowed

when the system should ask clarifying questions

how failure is communicated

what “good input” even means

This is not UX polish.

This is system survival.
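One way to make “what inputs are allowed” and “when to ask clarifying questions” concrete is a triage step that runs before any model sees the input. Below is a minimal Python sketch. The limits, the allowed file types, the three-word ambiguity heuristic and the `triage_input` name are all hypothetical placeholders, not a standard.

```python
import os
from dataclasses import dataclass
from typing import Optional

# Hypothetical limits; a real product would tune these per use case.
MAX_CHARS = 4000
ALLOWED_UPLOAD_TYPES = {".pdf", ".txt", ".csv"}


@dataclass
class InputDecision:
    action: str   # "accept", "clarify", or "reject"
    message: str  # what the user is told (empty when accepted)


def triage_input(text: str, upload_name: Optional[str] = None) -> InputDecision:
    """Decide what to do with raw user input before calling any model."""
    if upload_name is not None:
        suffix = os.path.splitext(upload_name)[1].lower()
        if suffix not in ALLOWED_UPLOAD_TYPES:
            # Communicate the failure plainly instead of failing downstream.
            return InputDecision("reject", f"Unsupported file type: {suffix or 'none'}")
    stripped = text.strip()
    if not stripped:
        return InputDecision("clarify", "What would you like help with?")
    if len(stripped) > MAX_CHARS:
        return InputDecision("reject", f"Input is longer than {MAX_CHARS} characters.")
    if len(stripped.split()) < 3:
        # Very short requests are often ambiguous: ask instead of guessing.
        return InputDecision("clarify", "Can you add a bit more detail about what you need?")
    return InputDecision("accept", "")
```

The point is not the specific rules. It is that each rule is a product decision someone wrote down, rather than something the model silently improvises.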

2. Context: what the AI actually knows

This layer decides whether the AI is:

helpful

confident

hallucinating

useless

Context includes:

retrieved documents

user state

history

permissions

memory

system instructions

Most PMs think hallucinations are a “model problem”.

They’re usually a context problem.

PMs who understand this stop arguing about models and start fixing data flow.
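To see why hallucinations are often a context problem, look at what actually gets assembled before the model is called. The sketch below assumes a simple role-based permission model and a plain-text prompt layout. Both are hypothetical, and real systems vary widely. It shows permissions, history, retrieved documents and system instructions all shaping what the model “knows.”

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_roles: set  # roles permitted to see this document (hypothetical model)


def build_context(system_instructions, user_role, history, retrieved, max_history=3):
    """Assemble the text the model will see for one request."""
    # Permissions: drop documents this user is not allowed to see.
    visible = [d for d in retrieved if user_role in d.allowed_roles]
    parts = [f"SYSTEM: {system_instructions}"]
    # History: keep only the most recent turns to bound context size.
    for turn in history[-max_history:]:
        parts.append(f"HISTORY: {turn}")
    for d in visible:
        parts.append(f"DOC[{d.doc_id}]: {d.text}")
    if not visible:
        # Tell the model explicitly that nothing relevant was found,
        # instead of leaving a gap it may fill with a confident guess.
        parts.append("DOC: none available; say you don't know rather than guess.")
    return "\n".join(parts)
```

If the right document never makes it into this string, no model, however large, can answer correctly. That is a data-flow bug, not a model bug.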

3. Reasoning: where unpredictability comes from

This is the LLM itself.

And here’s the hard truth:

Bigger models don’t save bad workflows.

PMs don’t need to tune weights.

But they do need to understand:

why outputs vary

why small prompt changes cause regressions

why “it worked yesterday” means nothing

why determinism is rare and dangerous to assume

This is where PMs learn to stop promising certainty.
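Here is a toy illustration of why outputs vary. LLMs score possible continuations and, unless decoding is greedy, sample from those scores. The sketch uses made-up scores for three canned replies instead of a real model. `temperature` mirrors the sampling parameter many LLM APIs expose. Treat it as an analogy, not as how any specific provider implements decoding.

```python
import math
import random


def sample_reply(scores, temperature, rng):
    """Pick one reply from model-style scores (logits)."""
    if temperature == 0:
        # Greedy decoding: always the top-scoring option.
        return max(scores, key=scores.get)
    # Softmax with temperature: higher values flatten the distribution.
    scaled = {reply: s / temperature for reply, s in scores.items()}
    top = max(scaled.values())
    weights = [math.exp(s - top) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


# Made-up scores for three candidate replies to the same prompt.
scores = {"Refund approved": 2.0, "Refund denied": 1.6, "Please contact support": 1.2}
rng = random.Random(7)

greedy = {sample_reply(scores, 0, rng) for _ in range(20)}
sampled = {sample_reply(scores, 1.0, rng) for _ in range(200)}
print(greedy)   # {'Refund approved'}
print(sampled)  # usually contains more than one distinct reply

# A small nudge to one score (a stand-in for a small prompt change)
# flips even the greedy answer.
nudged = {**scores, "Refund denied": 2.05}
print(sample_reply(nudged, 0, rng))  # prints Refund denied
```

Even the “deterministic” path is fragile: a tiny shift in scores changes the answer. Real APIs also do not always guarantee identical outputs at temperature 0.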

4. Actions: where AI stops being a demo

Talking AI is easy.

Doing AI is hard.

This layer is about:

API calls

writing to databases

triggering workflows

sending messages

executing steps

This is where PMs decide:

what the AI is allowed to do

what needs approval

what can be undone

what absolutely must not fail

This is product judgment - not engineering detail.
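The four questions above (allowed, needs approval, can be undone, must not fail) can be written down as an explicit policy that sits between the model and the tools it calls. Here is a minimal Python sketch. The action names and the rule “irreversible or critical means a human approves first” are hypothetical choices a PM might make, not a standard.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    BLOCK = "block"


@dataclass(frozen=True)
class ActionPolicy:
    allowed: bool     # may the AI ever do this?
    reversible: bool  # can it be undone?
    critical: bool    # must this absolutely not fail?


# Hypothetical action names and settings, for illustration only.
POLICIES = {
    "draft_reply":    ActionPolicy(allowed=True,  reversible=True,  critical=False),
    "send_email":     ActionPolicy(allowed=True,  reversible=False, critical=False),
    "issue_refund":   ActionPolicy(allowed=True,  reversible=False, critical=True),
    "delete_account": ActionPolicy(allowed=False, reversible=False, critical=True),
}


def gate_action(name: str) -> Decision:
    """Decide whether an action the model requested may run."""
    policy = POLICIES.get(name)
    if policy is None or not policy.allowed:
        # Unknown or forbidden actions are blocked by default.
        return Decision.BLOCK
    if policy.reversible and not policy.critical:
        return Decision.ALLOW
    # Irreversible or critical actions wait for a human.
    return Decision.NEEDS_APPROVAL
```

Blocking unknown actions by default means a new tool does nothing until someone has made these decisions for it.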

5. Evaluation: the part PMs don’t realize they own

Traditional products had QA.

AI products have continuous evaluation.

Someone has to decide:

what “good” looks like

what failure is acceptable

which errors matter

when to block releases

when to roll back behavior

That “someone” is increasingly the PM.

If you don’t define quality, the system slowly and quietly degrades.
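Defining “what good looks like” and “when to block releases” often ends up as a release gate over a fixed evaluation set. Here is a minimal sketch. It assumes each case has already been graded pass/fail (by a rubric, a human reviewer or a model grader) and tagged with a severity. The 90% threshold and the “any critical failure blocks” rule are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    case_id: str
    passed: bool   # graded elsewhere (rubric, human, or model grader)
    severity: str  # "minor" or "critical": how much a failure here matters


def release_gate(results, min_pass_rate=0.9):
    """Return (ship, reason) for a candidate model or prompt version."""
    if not results:
        return False, "no eval cases: quality is undefined"
    critical = [r for r in results if not r.passed and r.severity == "critical"]
    if critical:
        # Some errors matter more than the average: one is enough to block.
        return False, f"{len(critical)} critical failure(s)"
    pass_rate = sum(r.passed for r in results) / len(results)
    if pass_rate < min_pass_rate:
        return False, f"pass rate {pass_rate:.0%} below {min_pass_rate:.0%}"
    return True, f"pass rate {pass_rate:.0%}"
```

The code is trivial on purpose. The hard, PM-owned part is choosing the cases, the severities and the threshold.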

6. Monitoring: why AI products age fast

AI products don’t break loudly.

They decay.

Quality drops.

Edge cases grow.

User trust erodes.

PMs are now expected to notice:

behavior drift

performance changes

confidence mismatches

silent failures

This is why AI PMs think in loops, not launches.
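“Noticing behavior drift” usually means comparing a rolling quality signal against a baseline. Here is a minimal sketch. The quality score (for example, the share of responses users rate as helpful), the window size and the tolerance are hypothetical, and production monitoring would track several signals, not one.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Flag when recent quality drops meaningfully below a baseline."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05):
        self.baseline = baseline    # quality level considered "normal"
        self.tolerance = tolerance  # how much drop is accepted before alerting
        self.recent = deque(maxlen=window)  # oldest scores fall out automatically

    def record(self, score: float) -> bool:
        """Add one quality score (0..1); return True if drift is detected."""
        self.recent.append(score)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet to judge
        return mean(self.recent) < self.baseline - self.tolerance
```

Nothing here “breaks.” The alert fires only because someone decided in advance what normal looks like. That is the loop this section describes.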

7. Humans: still in the system, just differently

The best AI products don’t remove humans.

They place them carefully.

PMs decide:

where humans add leverage

where they slow things down

where oversight is mandatory

where autonomy is safe

This is not a safety checkbox.

It’s product design.
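Deciding “where autonomy is safe” and “where oversight is mandatory” can be expressed as a simple routing rule. Here is a minimal sketch. It assumes each AI output comes with a confidence score and a risk label. Both, and the per-risk thresholds, are hypothetical.

```python
# Hypothetical per-risk confidence thresholds; None means a human always reviews.
AUTONOMY_THRESHOLDS = {"low": 0.70, "medium": 0.90, "high": None}


def route(confidence: float, risk: str) -> str:
    """Return 'auto' if the AI may proceed alone, otherwise 'human_review'."""
    # Unknown risk labels map to None and fall back to human review.
    threshold = AUTONOMY_THRESHOLDS.get(risk)
    if threshold is None:
        return "human_review"  # oversight is mandatory here
    return "auto" if confidence >= threshold else "human_review"
```

Low-risk, high-confidence cases skip review so humans don’t slow them down. High-risk cases always get a person. In practice, model confidence scores are often poorly calibrated, which is why the monitoring layer watches for confidence mismatches.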

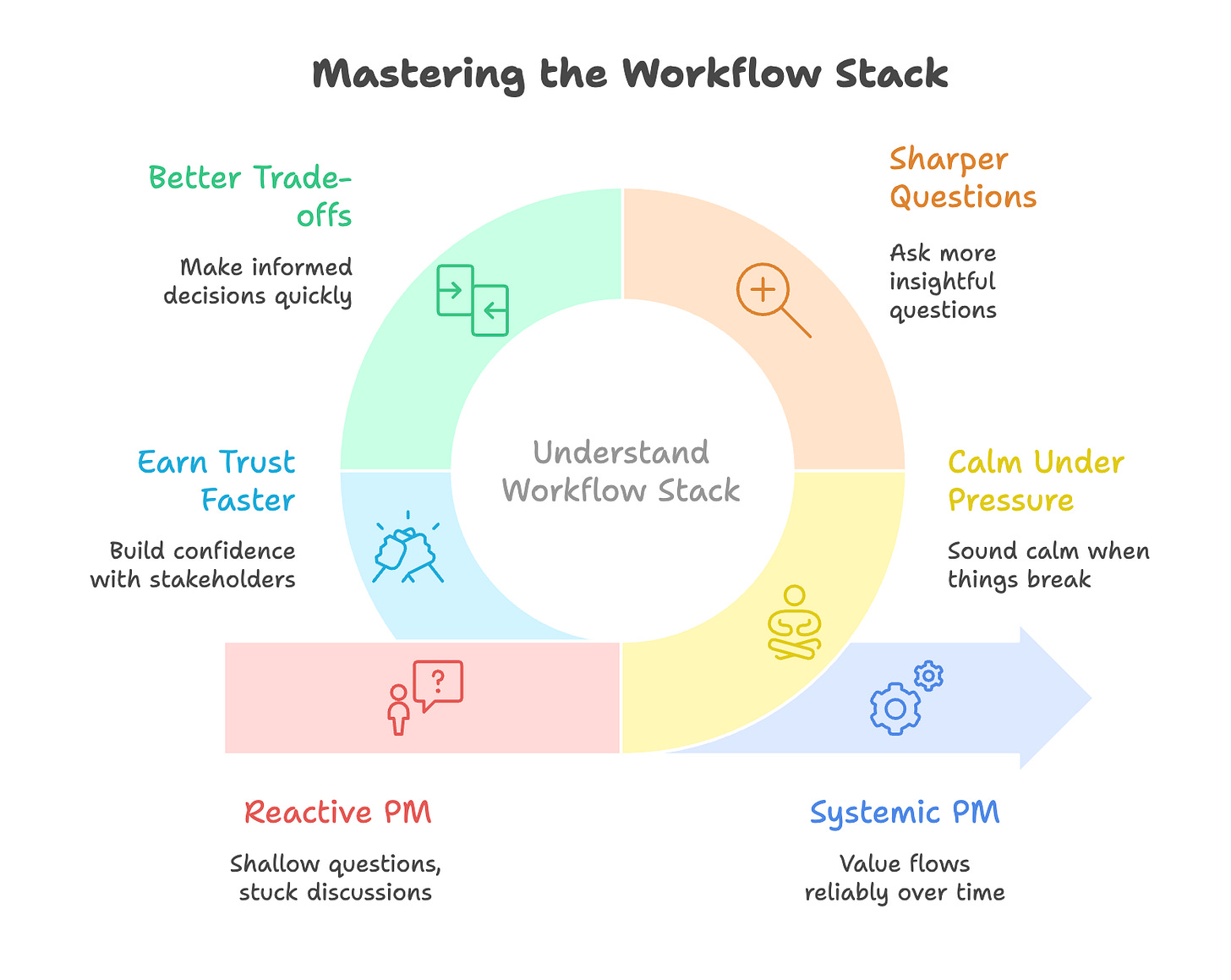

Why this stack changes PM careers

Here’s what no one says out loud:

PMs are already being evaluated on this understanding.

Not formally.

Not explicitly.

But in meetings, reviews, and hiring decisions.

You can see it when:

PMs ask shallow questions

discussions get stuck on tools

quality issues surprise people

failures feel mysterious instead of diagnosable

PMs who understand the workflow stack:

sound calm when things break

ask sharper questions

make better trade-offs

earn trust faster

PMs who don’t:

feel reactive

rely on engineers to explain everything

struggle to lead through ambiguity

The real shift

The PM job didn’t become “more technical”.

It became more systemic.

From:

“What should we build?”

To:

“How does value reliably flow through this system over time?”

That’s the new bar.

You don’t need to chase every AI tool.

You don’t need to become an ML expert.

But you do need to understand how AI changes the shape of work.

Because PMs are no longer judged by how well they explain ideas.

They’re judged by how well they design systems that keep working.

And this AI workflow stack?

It’s already the baseline.
