How to Conduct A/B Testing in Product Management

A beginner's guide for Product Managers

👋🏻 Hey there, welcome to the 32nd edition of the Product Space Newsletter, where we help you become better at product management.

A/B testing is a fundamental tool in a product manager's toolkit, enabling data-driven decision making and product optimization. This article will walk you through everything you need to know about conducting effective A/B tests, from understanding the basics to analyzing results and making informed product decisions.

What is A/B Testing and Why is it Important in Product Management?

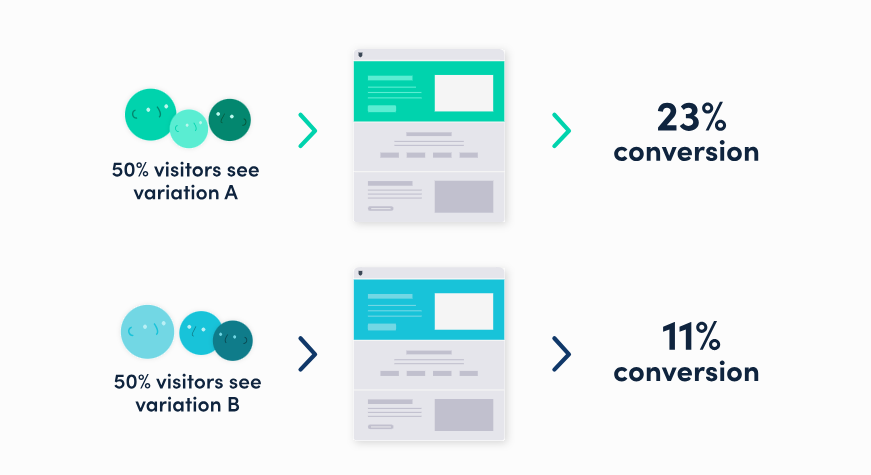

A/B testing, also known as split testing, is a method of comparing two versions of a product feature, webpage, or user experience to determine which performs better. Version A is typically the current version (control), while Version B includes the proposed changes (variant). By randomly showing these two versions to your users and measuring the results, you can gather quantitative data to validate your hypotheses and make data-driven decisions.
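To make "randomly showing these two versions" concrete, here is a minimal sketch of one common way to split users: hashing the user ID so that each user always lands in the same group. The function name `assign_variant`, the experiment name, and the 50/50 split are assumptions for illustration, not a specific tool's API.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'A' (control) or 'B' (variant)."""
    # Salting with the experiment name keeps assignments independent across tests.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex digits into a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < treatment_share else "A"

# Hypothetical user IDs, just to show that the split comes out close to even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-button-color")] += 1
print(counts)
```

Because the assignment depends only on the user ID and the experiment name, a returning user sees the same version on every visit, which keeps the experience consistent and the data clean.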

For product managers, A/B testing is crucial because it:

Removes Guesswork from Product Decisions: Instead of relying on assumptions or gut instincts, A/B testing provides concrete data to support your decisions. This helps you avoid costly mistakes and ensures you're making changes that will truly benefit your users.

Minimizes Risk by Validating Changes: By testing changes on a subset of your user base before a full rollout, you can mitigate the risk of implementing something that could negatively impact your product's performance or user experience.

Provides Quantitative Data to Support Product Strategy: The insights gained from A/B tests can strengthen your product roadmap, feature prioritization, and overall strategic decision-making. You'll have a better understanding of what resonates with your users.

Helps Optimize User Experience and Business Metrics: A/B testing empowers you to continuously refine and improve various aspects of your product, from user interfaces to content and pricing, ultimately leading to enhanced user satisfaction and better business outcomes.

Creates a Culture of Experimentation and Continuous Improvement: Embracing A/B testing fosters an organizational mindset of experimentation, where product decisions are backed by data rather than assumptions. This mindset drives a culture of continuous learning and improvement.

How Do PMs Use A/B Testing - Use Cases in Product Management

A/B testing can transform how product managers approach decisions across various product aspects, enabling data-driven insights and continuous optimization. Let’s break down these use cases in detail:

User Interface (UI) Changes

To determine the UI design that optimizes user engagement and conversion rates, we test various elements, including button placements, colors, text, font sizes, layout arrangements, form designs, and call-to-action (CTA) placements.

Even minor adjustments to UI elements can have a substantial effect on user behavior, encouraging actions like conversions, sign-ups, or further exploration.

For instance, running a test on the color and placement of the CTA button on a landing page can reveal which design draws the most clicks.

Tip: Start with high-traffic pages to quickly gather data, but remember to account for user behavior differences across devices (mobile vs. desktop).

Feature Optimization

Before committing to a full-scale launch, we evaluate the effectiveness of new features, variations in their behavior, default settings, and specific user flows within the product. This ensures that the feature improves user experience and achieves the desired benefits.

For instance, when rolling out a new feature like a personalized feed or advanced search, an A/B test helps assess its immediate impact on metrics like time spent on the page or frequency of feature use.

Tip: When testing new features, measure secondary metrics like user retention or satisfaction to gain a comprehensive view of the feature’s impact.

Content and Messaging

To boost user engagement, enhance understanding, and drive conversions, we test different variations of headlines, in-product messaging, onboarding instructions, email subject lines, and product descriptions to find the content that resonates best. Effective content and messaging significantly shape user perception and influence their actions.

For instance, optimizing onboarding copy through A/B tests can help users better understand the value proposition, reducing drop-off rates during signup.

Tip: Test across different user segments to capture preferences that may vary by demographics or user journey stage.

Pricing and Packaging

To find pricing structures that optimize revenue while maintaining customer satisfaction and minimizing churn, A/B testing is done on different price points, subscription tiers, bundling options, or discount strategies. Price testing allows you to understand how much users are willing to pay for certain features or product tiers.

For instance, you could test the impact of a free trial versus a discounted first-month rate on sign-ups and long-term retention.

Tip: Use A/B tests to understand how changes in pricing affect not only immediate revenue but also long-term value, such as customer lifetime value (CLV) and churn rates.

Algorithm Changes

To enhance user satisfaction and engagement, we test modifications to search ranking algorithms, recommendation systems, feed algorithms, and personalization logic. With algorithms, even minor changes can lead to significant improvements in user engagement.

For instance, you might A/B test different sorting logic in a recommendation engine to see if personalized suggestions increase click-through rates.

Tip: Keep in mind that algorithm tests can require more time to show reliable results, as users need sufficient exposure to the changes for a measurable impact. Additionally, consider segmenting users to evaluate performance across different behavioral profiles.

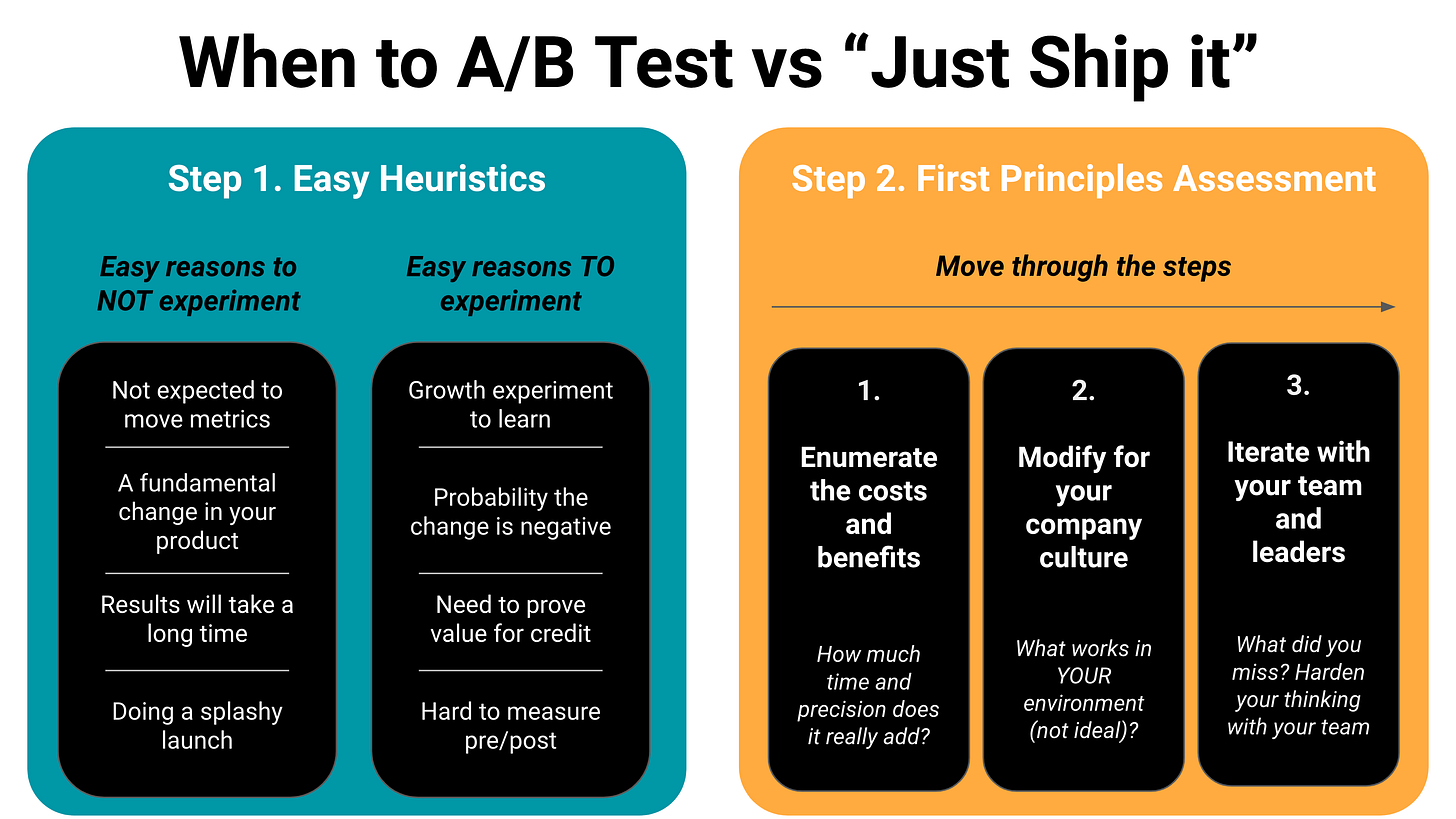

When to Start Using A/B Testing and When Not to Use A/B Tests

When to Use A/B Testing

Sufficient Traffic Volume: A/B testing requires enough traffic to your product to achieve statistical significance and reliable results. The exact number depends on your baseline conversion rate and the size of the effect you want to detect, but it often runs to thousands of users per variant.

Clear Metrics: Before running an A/B test, you need to have well-defined success metrics that align with your business objectives. These could be conversion rates, engagement metrics, revenue-related KPIs, or any other relevant measures of performance.

Optimization Phase: A/B testing is most effective when you're in the optimization phase of your product development lifecycle, fine-tuning existing features or choosing between multiple viable solutions. It's less suitable for major, transformative changes.

Low-Risk Changes: The test variations you're considering should not severely impact the core user experience. Ideally, the changes should be reversible so you can quickly revert if needed.

When Not to Use A/B Testing

Low Traffic: If your product or feature has very low traffic, you may not be able to achieve statistical significance within a reasonable timeframe. In such cases, A/B testing may not be the most suitable approach.

Major Product Changes: For complete redesigns, fundamental feature changes, or alterations that affect the core user experience, A/B testing may not be the best fit. These types of transformative changes are often better suited for other user research methods.

Technical Limitations: If implementing the necessary test variations is overly complex from a technical standpoint, or if you're unable to track the proper metrics, A/B testing may not be feasible.

Time Constraints: When immediate decisions are required, and the cost of delaying the decision outweighs the potential benefits of A/B testing, it may be more appropriate to rely on other decision-making approaches.

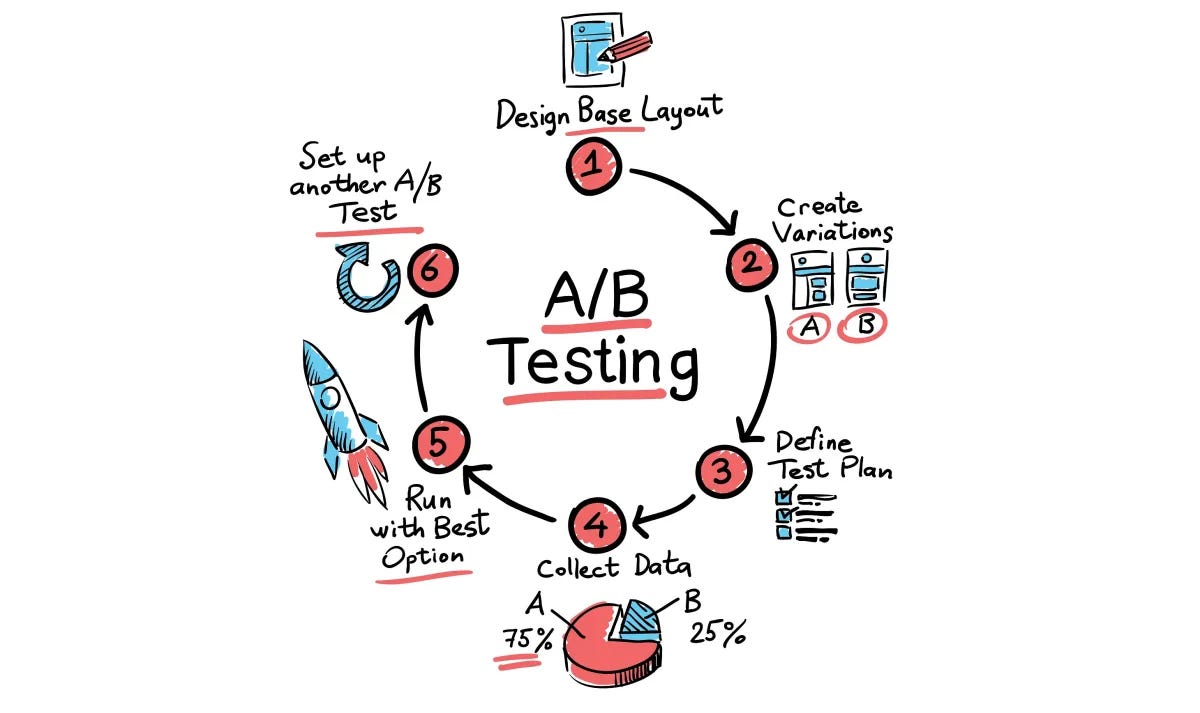

How to Run an A/B Test

1. Planning Phase

Define the Problem: Start by clearly identifying the specific issue or opportunity you want to address. Document the current performance metrics and set clear objectives for improvement. This will help you establish a solid foundation for your A/B test.

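Form a Hypothesis: Develop a clear hypothesis that states your assumption about the expected outcome of the test. For example, "Changing the checkout button color to green will increase the conversion rate by 5% because the button will stand out more on the page." Your hypothesis should include the expected outcome and the reasoning behind it, and it should make clear whether the lift is relative (10% to 10.5%) or absolute (10% to 15%).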

Determine Success Metrics: Identify the primary metric you want to improve (the main goal), as well as any secondary metrics that could be affected (potential side effects) and guardrail metrics to ensure no negative impact on other areas of your product.

2. Design Phase

Sample Size Calculation: Use statistical power analysis to determine the required sample size for your A/B test. This will ensure you have enough users in each variant to detect a meaningful difference with a high degree of confidence. Consider factors like the minimum detectable effect, significance level, and statistical power.
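As a rough illustration of the power analysis described above, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. The function name and the example numbers (a 10% baseline and a 1 percentage point minimum detectable effect) are assumptions; a dedicated calculator or your testing tool may use a slightly different formula.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate: float,
                            min_detectable_effect: float,
                            alpha: float = 0.05,
                            power: float = 0.80) -> int:
    """Users needed in EACH variant for a two-sided test on two proportions."""
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_effect  # effect given as an absolute lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance level
    z_beta = NormalDist().inv_cdf(power)           # statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical inputs: 10% baseline conversion, detect a lift to 11%.
print(sample_size_per_variant(0.10, 0.01))
```

With these inputs the answer comes out at a little under 15,000 users per variant. Halving the minimum detectable effect roughly quadruples the requirement, which is why small expected lifts need so much traffic.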

Test Duration Planning: Calculate the minimum test duration needed to reach your required sample size, taking into account factors like weekly/daily traffic patterns, seasonal variations, and user behavior cycles. Running the test for whole weeks helps ensure weekday and weekend behavior are both represented. This will help you plan an appropriate testing timeline.
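The duration arithmetic can be sketched as follows. The function name, the traffic figures, and the choice to round up to whole weeks are assumptions for illustration; the required sample size would come from your own power analysis.

```python
import math

def planned_duration_days(required_per_variant: int,
                          daily_eligible_users: int,
                          num_variants: int = 2,
                          traffic_share: float = 1.0) -> int:
    """Days needed to fill every variant, rounded up to full weeks."""
    total_needed = required_per_variant * num_variants
    daily_in_test = daily_eligible_users * traffic_share
    raw_days = math.ceil(total_needed / daily_in_test)
    # Round up to whole weeks so each day of the week is covered equally.
    return math.ceil(raw_days / 7) * 7

# Hypothetical inputs: 14,749 users per variant, 3,000 eligible users per day.
print(planned_duration_days(14_749, 3_000))
```

If only part of your traffic enters the experiment, lower `traffic_share` accordingly and the duration stretches to match.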

Control for Variables: Identify any potential confounding variables that could influence the test results, and plan to control for them. Ensure equal distribution of traffic between the control (A) and variant (B) groups to avoid systematic biases.

3. Implementation Phase

Technical Setup: Implement the necessary tracking mechanisms, set up the variant distribution system, and test the implementation thoroughly to ensure everything is working as expected.

Quality Assurance: Verify proper tracking, check for any technical issues, and ensure a consistent user experience across both the control and variant groups.

Documentation: Document all the test parameters, implementation details, and any unexpected issues encountered during the setup process. This will help you analyze the results more effectively and learn from the experience.

4. Monitoring Phase

Regular Checks: During the test, monitor the progress daily, checking for any technical issues or unexpected results. This will allow you to quickly identify and address any problems that may arise.

Sample Ratio Mismatch: Regularly verify that the traffic split between the control and variant groups remains consistent throughout the test. Look out for any systematic biases that could skew the results.
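One way to check for a sample ratio mismatch is a chi-square goodness-of-fit test on the user counts in each group. The sketch below assumes a two-group test and uses only the standard library; the function name and the 0.001 alert threshold are assumptions (a strict threshold is a common convention for this check, but pick one that suits your team).

```python
import math

def srm_p_value(control_users: int, variant_users: int,
                expected_control_share: float = 0.5) -> float:
    """P-value for 'the observed split matches the intended split'."""
    total = control_users + variant_users
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (variant_users - expected_variant) ** 2 / expected_variant)
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))

# Hypothetical counts for an intended 50/50 split.
for control, variant in [(50_000, 50_100), (50_000, 48_500)]:
    p = srm_p_value(control, variant)
    status = "investigate" if p < 0.001 else "ok"
    print(control, variant, status)
```

The first pair differs by an amount that chance easily explains, while the second is far too lopsided for a true 50/50 split. A flagged mismatch usually points to a bug in assignment or tracking, so it is worth resolving before trusting any other result from the test.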

Analyzing A/B Test Results

1. Statistical Analysis

When analyzing your A/B test results, focus on the following key metrics:

Statistical significance: Determine if the observed difference between the variants is statistically significant, typically judged at a 95% confidence level (a p-value below 0.05).

Confidence intervals: Understand the range of values within which the true effect size is likely to fall.

Effect size: Quantify the magnitude of the difference between the variants, which is essential for evaluating practical significance.

Sample size adequacy: Ensure you had enough users in each variant to achieve reliable results.
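The first three metrics above can be computed together for a conversion-rate test. Here is a minimal sketch using a two-proportion z-test with a normal approximation; the function name and the example counts are assumptions, and most testing tools will run an equivalent (or more sophisticated) calculation for you.

```python
import math
from statistics import NormalDist

def compare_conversion(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       alpha: float = 0.05) -> dict:
    """Effect size, p-value, and confidence interval for B minus A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error for the significance test (assumes no true difference).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    p_value = 2 * (1 - NormalDist().cdf(abs(diff / se_pooled)))
    # Unpooled standard error for the confidence interval around the lift.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return {
        "absolute_lift": diff,
        "relative_lift": diff / p_a,
        "p_value": p_value,
        "ci": (diff - z_crit * se, diff + z_crit * se),
    }

# Hypothetical results: 1,000 of 10,000 convert on A, 1,100 of 10,000 on B.
result = compare_conversion(1_000, 10_000, 1_100, 10_000)
print(result)
```

In this example the lift is 1 percentage point (10% relative), the p-value is about 0.02, and the 95% confidence interval runs from roughly 0.15 to 1.85 percentage points. The result is significant, but the true effect could plausibly be quite small, which is exactly what the interval is there to tell you.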

Common Pitfalls to Avoid: Be wary of the following common issues when analyzing A/B test results:

Stopping tests too early: Ending the test before the planned sample size is reached, or stopping the moment the results first look significant, inflates the false positive rate and can also hide real effects.

Multiple testing problems: Running many tests, or comparing many metrics and segments within one test, without adjusting the significance level increases the chances of finding false positives.

Simpson's paradox: A situation where the overall trend in the data may be reversed when looking at subgroups.

Selection bias: Unintentionally skewing the sample population, which can impact the generalizability of the results.
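Simpson's paradox is easier to see with numbers. The made-up data below shows a variant that wins on both mobile and desktop yet loses overall, because the two groups ended up with very different device mixes. All counts are invented for illustration.

```python
# (conversions, users) per group and segment; all numbers are invented.
segments = {
    "mobile":  {"A": (100, 2_000),   "B": (480, 8_000)},
    "desktop": {"A": (1_600, 8_000), "B": (420, 2_000)},
}

def rate(conversions: int, users: int) -> float:
    return conversions / users

# Winner inside each segment.
per_segment_winner = {
    name: max(groups, key=lambda g: rate(*groups[g]))
    for name, groups in segments.items()
}

# Winner when the segments are lumped together.
totals = {
    g: (sum(segments[s][g][0] for s in segments),
        sum(segments[s][g][1] for s in segments))
    for g in ("A", "B")
}
overall_winner = max(totals, key=lambda g: rate(*totals[g]))

print(per_segment_winner)  # B wins on mobile (6% vs 5%) and desktop (21% vs 20%)
print(overall_winner)      # A wins overall (17% vs 9%)
```

The reversal happens because most of B's users are on mobile, where conversion is low for everyone. In a properly randomized test the device mix should be nearly identical across groups, so a pattern like this is usually a sign that the traffic split itself is broken.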

2. Interpretation Guidelines

While statistical significance is crucial, it's essential to also consider the practical significance of your A/B test results. Evaluate the business impact of the observed effects and assess the long-term implications for your product and users.

Review the test results across different user segments to identify any variations in the effects. This can reveal opportunities for personalization or targeted optimization strategies.
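A word of caution on segment reviews: every extra segment you inspect is another chance for a false positive. One way to guard against that is the Holm-Bonferroni correction, sketched below. The function name and the segment p-values are assumptions for illustration; other corrections exist and your tool may apply a different one.

```python
def holm_significant(p_values: dict[str, float], alpha: float = 0.05) -> dict[str, bool]:
    """Flag which comparisons stay significant after Holm-Bonferroni."""
    ordered = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ordered)
    flags = {}
    still_rejecting = True
    for rank, (name, p) in enumerate(ordered):
        # The smallest p-value faces alpha / m, the next alpha / (m - 1), and so on.
        if still_rejecting and p <= alpha / (m - rank):
            flags[name] = True
        else:
            # Once one comparison fails, all larger p-values fail too.
            still_rejecting = False
            flags[name] = False
    return flags

# Hypothetical per-segment p-values from one test.
segment_p = {"new users": 0.004, "returning": 0.03, "mobile": 0.04, "desktop": 0.20}
print(holm_significant(segment_p))
```

Three of these four segments would look significant at a naive 0.05 cutoff, but only "new users" survives the correction. Treat the others as hypotheses for a follow-up test rather than as findings.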

3. Decision Making

After thoroughly analyzing the A/B test results, determine if the test was a success. Consider the following questions:

Was statistical significance achieved?

Was the practical significance (i.e., business impact) meaningful?

Were there any negative impacts on other key metrics?

If the test was successful, roll out the winning variant and document the key learnings. If the results were inconclusive or showed negative impacts, you may need to revisit your hypothesis, test design, or consider running follow-up tests.
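The three questions above can be turned into a simple, explicit decision rule. This is only a sketch of one possible policy: the class, field names, thresholds, and wording of each recommendation are assumptions, and real decisions will usually weigh more context than a function can capture.

```python
from dataclasses import dataclass

@dataclass
class ExperimentSummary:
    p_value: float             # from the significance test on the primary metric
    lift: float                # observed lift on the primary metric
    ci_low: float              # lower bound of the lift's confidence interval
    min_practical_lift: float  # smallest lift worth the cost of shipping
    guardrails_ok: bool        # no meaningful harm to guardrail metrics

def recommend(summary: ExperimentSummary, alpha: float = 0.05) -> str:
    if not summary.guardrails_ok:
        return "do not ship: a guardrail metric regressed"
    if summary.p_value >= alpha:
        return "inconclusive: revisit the hypothesis or run a follow-up test"
    if summary.lift <= 0:
        return "do not ship: the variant performed worse"
    if summary.ci_low < summary.min_practical_lift:
        return "significant, but the lift may be too small to matter"
    return "roll out the variant and document the learnings"

# Hypothetical summary of a finished test.
print(recommend(ExperimentSummary(p_value=0.01, lift=0.02, ci_low=0.008,
                                  min_practical_lift=0.005, guardrails_ok=True)))
```

Writing the rule down before the test starts makes it harder to rationalize a disappointing result after the fact.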

Tools to Run A/B Tests

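Optimizely, VWO (Visual Website Optimizer), Adobe Target, Google Optimize 360. These enterprise-level solutions offer advanced features, enterprise-grade security, and scalability, making them suitable for large organizations with complex testing requirements. Note that Google discontinued Google Optimize, including Optimize 360, in September 2023, so it is no longer an option for new tests.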

LaunchDarkly, Split.io, Firebase A/B Testing, Amplitude Experiment. Designed for mid-sized businesses, these tools provide a balance of functionality and affordability, making them accessible to a wider range of product teams.

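GrowthBook, Wasabi, PlanOut, Google Analytics Experiments. Open-source and free A/B testing tools can be a cost-effective option, particularly for smaller teams or those with specific technical requirements. GrowthBook, Wasabi, and PlanOut are open source, while Google Analytics Experiments was a free feature rather than an open-source project. These tools may lack some of the advanced features and support of commercial solutions.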

When choosing an A/B testing tool, consider factors such as your traffic volume, technical requirements, budget constraints, integration needs, and the level of analysis capabilities you require.

That’s a wrap for today!

Do you have a question or a handy tip to share about A/B testing? We’d love to hear from you!

Share in the comments below or reply to this email.

Until next time, keep innovating, keep iterating, and above all, keep being awesome.

Cheers!

Product Space